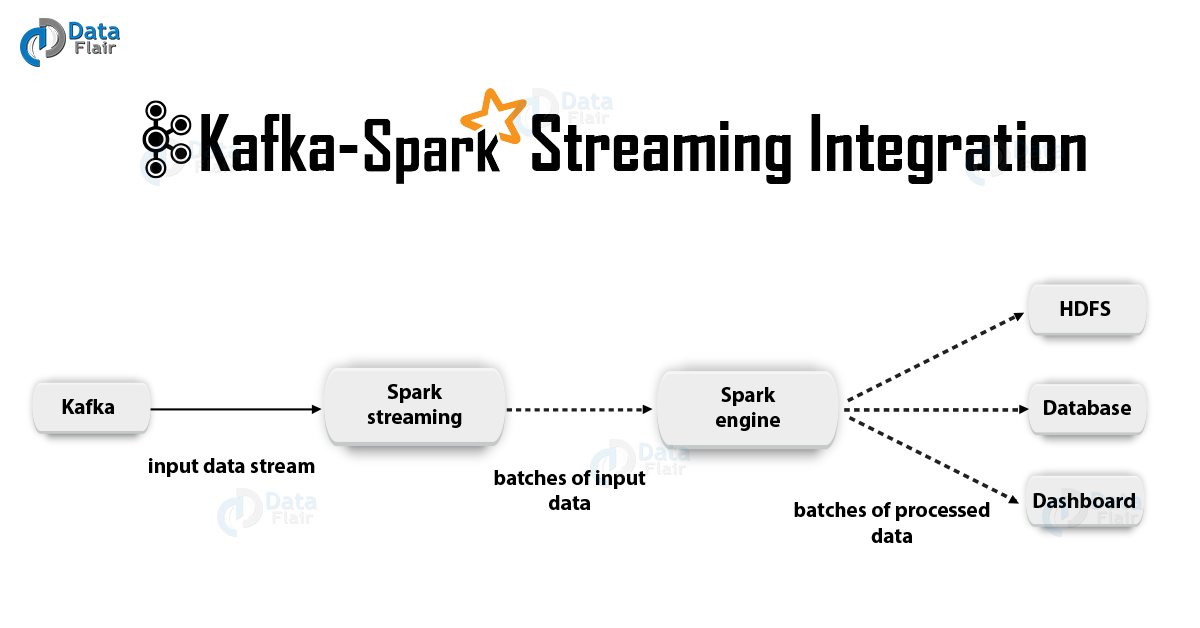

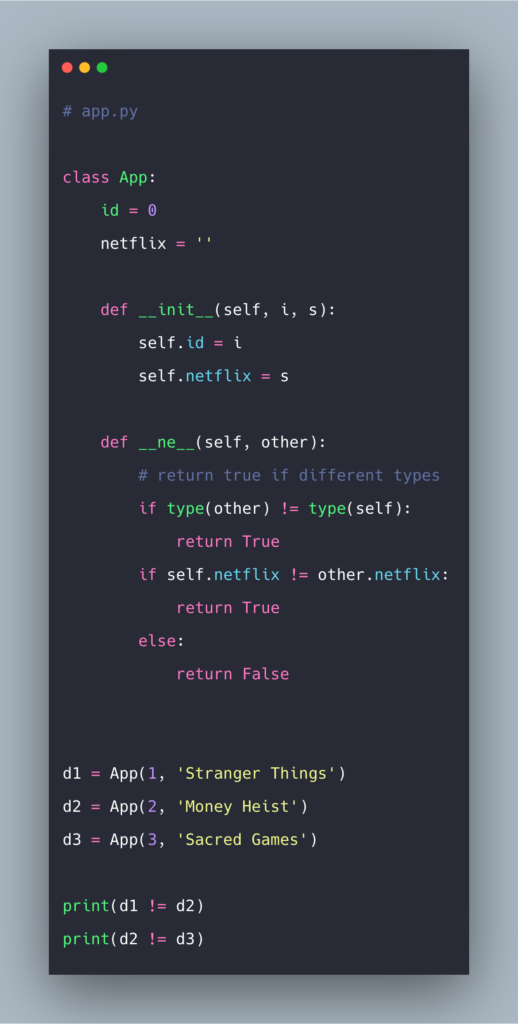

Read a CSV file and store it in MongoDB with a `SparkSession` (`spark`). The Python Spark shell, PySpark, is an interactive shell through which we can access Spark's API using Python. The output is pointed at the target collection with `option('', 'mongodb://mongodb:27017/spark.output')`.

Watching the short lecture on getting the code from GitHub, I heard the best summary of branches ever.
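The CSV-to-MongoDB flow described above might look roughly like the sketch below. It is only an illustration under several assumptions: the MongoDB Spark connector is already on the classpath, your connector version registers the `mongo` data source name and accepts a `uri` option (older releases may need the full class name `com.mongodb.spark.sql.DefaultSource`), and `people.csv` is a hypothetical input file. The helper names `mongo_write_options` and `csv_to_mongo` are made up for this example; the URI is the one quoted in the text.

```python
"""Sketch: read a CSV file with Spark and append it to a MongoDB collection."""


def mongo_write_options(uri):
    # Assumption: the connector takes the target as a single "uri" option
    # of the form mongodb://host:port/database.collection.
    return {"uri": uri}


def csv_to_mongo(spark, csv_path, uri):
    # Read the CSV with a header row and let Spark infer the column types.
    df = spark.read.csv(csv_path, header=True, inferSchema=True)
    df.show()  # print a preview of the first rows
    # Assumption: "mongo" is the data source name of the installed connector.
    (df.write.format("mongo")
        .mode("append")
        .options(**mongo_write_options(uri))
        .save())
    return df


def main():
    # Imported here so the helpers above can be loaded without pyspark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("csv-to-mongo").getOrCreate()
    csv_to_mongo(spark, "people.csv", "mongodb://mongodb:27017/spark.output")
    spark.stop()
```

Calling `main()` from a script run with `spark-submit`, or calling `csv_to_mongo(spark, ...)` inside the PySpark shell where `spark` already exists, would run the whole flow.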

Thanks for the content Michael Kennedy! - Shay E.

Are you kidding? By the end of the first Mongo Quickstart coding example I was checking out colorama, which was hardly mentioned, if at all. Seeing how you designed the different parts of the application while explaining the logic behind them was great. The introduction to Robo 3T by itself was worth it for me; it is an amazing tool I didn't know before.

I've populated the cluster with the sample data.

For Databricks Runtime 5.5 LTS and 6.x, enter :mongo-spark-connector2.11.

Just finished going through this, a great intro to MongoDB and NoSQL in general.

I am trying to pass data back and forth via Python to a free MongoDB collection that I have created with my account.

Enter the MongoDB Connector for Spark package value into the Coordinates field based on your Databricks Runtime version: For Databricks Runtime 7.0.0 and above, enter :mongo-spark-connector2.12:3.0.0.

Looking forward to working with Python and even doing some ML. Just wanted to let you know that I got a job with a startup. Michael, you were born to teach, man! - William H.

Spent the morning with Talk Python's course on Python and MongoDB.

What worked for me in the end was the following configuration (setting up or configuring your mongo-spark-connector):

I had to inspect the pom.xml on Maven Central for the version of mongo-spark-connector I needed, to see which version of mongo-java-driver it required, and then downloaded the corresponding mongodb-driver-core and bson jars. Using the correct Spark and Scala versions with the matching mongo-spark-connector jar version is obviously key here, along with the correct versions of the mongodb-driver-core, bson and mongo-java-driver jars.

Finally, test with the Scala shell: `spark-shell --conf … --conf … --jars /mongo-spark-connector_2.11-2.2.1.jar,/mongodb-driver-core-3.4.2.jar,/bson-3.4.2.jar,/mongo-java-driver-3.4.2.jar`

Test pyspark (example using an aggregate pipeline): `pyspark --jars /mongo-spark-connector_2.11-2.2.1.jar,/mongodb-driver-core-3.4.2.jar,/bson-3.4.2.jar,/mongo-java-driver-3.4.2.`
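Since the connector, driver-core, bson and Java driver jars all have to be passed together, it can help to build the comma-separated `--jars` value in one place. The snippet below is a small illustrative helper, not part of the original setup: the function names and the `/opt/jars` directory are hypothetical, and only the jar file names come from the text.

```python
import posixpath
import shlex

# Jar file names as listed in the text (connector 2.2.1 for Scala 2.11,
# MongoDB Java driver family 3.4.2).
JARS = [
    "mongo-spark-connector_2.11-2.2.1.jar",
    "mongodb-driver-core-3.4.2.jar",
    "bson-3.4.2.jar",
    "mongo-java-driver-3.4.2.jar",
]


def jars_argument(jar_dir, jar_names=JARS):
    """Join the jar paths with commas, the form that --jars expects."""
    return ",".join(posixpath.join(jar_dir, name) for name in jar_names)


def shell_command(shell, jar_dir):
    """Build a command line for `pyspark` or `spark-shell`."""
    return " ".join([shell, "--jars", shlex.quote(jars_argument(jar_dir))])


# Hypothetical jar directory; replace it with wherever the jars were downloaded.
print(shell_command("pyspark", "/opt/jars"))
```

Keeping the version numbers in a single list makes it harder to mix a connector build with driver jars from a different release, which is the mismatch the text warns about.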
